29 April 2025

Eito Tamura

Introduction

As we dive deeper into AI Red Teaming, we often explore AI-related research to gain both knowledge and hands-on experience. This mini-research project started as an exploration of the Model Context Protocol (MCP) through the development of an MCP server. I will briefly explain what MCP is, outline security-focused design considerations, and describe my MCP implementation to augment Claude LLM for interacting with Elasticsearch to assist with threat identification.

Background

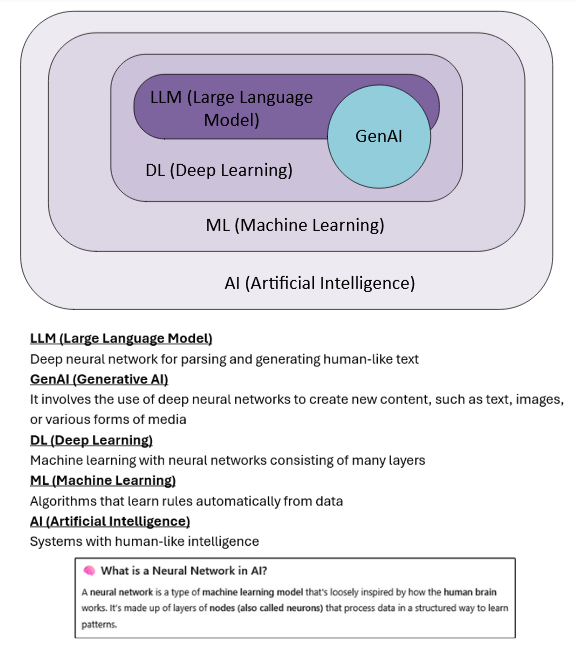

If you're new to the world of AI and find yourself unsure about terms like AI, Machine Learning (ML), Deep Learning (DL), Large Language Models (LLMs), and Generative AI (GenAI), don't worry, you're not alone. These terms often get used interchangeably, but they actually refer to different layers within a broader hierarchy. To help clarify the differences, I've included a simple diagram below. In essence, AI is the broad umbrella, under which ML, DL, LLMs, and GenAI all fall, each building on the capabilities of the previous layer.

Background - MCP

The MCP, introduced by Anthropic in November 2024, has quickly evolved and gained widespread adoption among developers building augmented and agentic AI systems. By default, large language models (LLMs) cannot run code, access networks, or interact with external systems, unless these capabilities are explicitly provided through additional tools or plugins.

As many of you are already seeing, AI is increasingly being integrated with other systems and gaining more practical capabilities. This trend has led to the rise of augmented and agentic AI systems equipped with tools and functions that enable them to perform tasks, make decisions, and interact with external environments. However, early implementations were often complex, requiring each function to be manually integrated with no widely adopted standard for tool usage.

To address this, Anthropic introduced MCP, an open standard that simplifies integration by providing a universal protocol for connecting AI models to external tools and data sources. This makes it easier to build more capable and flexible AI assistants.

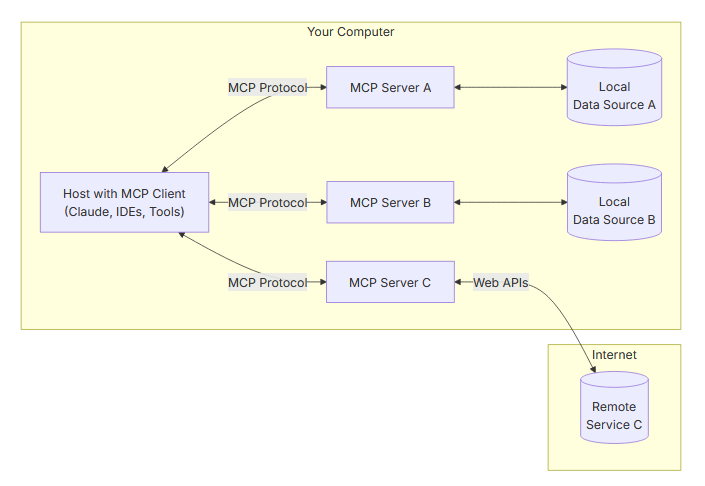

The official MCP website shares the following diagram, which illustrates a client-server architecture where a host application (MCP client) can connect to multiple MCP servers.

- MCP Server: Lightweight programs that expose specific capabilities via the standardised protocol

- MCP Client: Protocol clients that maintain 1:1 connections with servers

- MCP Host: Applications like Claude Desktop, IDEs, or other AI tools that access data and capabilities through MCP

- Local Data Sources: Host's files, databases, and services that MCP servers can access

- Remote Services: External systems available over the internet (e.g., APIs) that MCP servers can consume

MCP servers can provide three main types of capabilities:

- Resources: File-like data that can be read by clients (like API responses or file contents)

- Tools: Functions that can be called by the LLM, conditional to user approval

- Prompts: Templates that help users accomplish specific tasks

🔐 MCP Security: Design Considerations

When developing AI-enabled workflows with MCP, it's crucial to incorporate security measures from the very beginning. Whether the application is intended for internal users, corporate operations, or customer-facing services in production, security should be a foundational element of the design. It’s essential to evaluate the security of the entire solution, not just the AI components. Depending on MCP's capabilities and its implementation, it could introduce new attack vectors. Given that MCP is a relatively new technology, there is a risk that security considerations could be overlooked in the rush to prioritise functionality. It is therefore critical to embed security early in the design and implementation phases.

Below is a breakdown of key security considerations I recommend to ensure a secure MCP implementation.

Understand the Use Case and the Risks

Purpose DefinitionClearly define the MCP server’s purpose to anticipate attack vectors and tailor security measures. For example, an MCP server that interacts with SQL databases to manage assets could introduce attack vectors such as SQL injection. Security measures must address both SQL specific vulnerabilities and those unique to AI systems.

Threat Intelligence / Risk AnalysisIdentify potential threats: who might attack the solution, what the business impact would be, and how you could minimise the risks. For example, could it be targeted by external attackers over the internet? Does the solution interact with sensitive data that attackers might be interested in? Can it be IP allow-listed for specific users?

Architecture & Implementation

Hosting EnvironmentHost the MCP server in a secure environment (local, internal container, or cloud). For multi-user access, remote deployments are preferred, as they better support scalable authentication.

Open-Source CodeIf you are using someone else's code (i.e. open-source projects), make sure you review and understand it before deploying it. Additionally, consider first exploring official integrations maintained by companies that build production-ready MCP servers for their platforms. Some of these are listed here.

Client TypesSecure clients like Claude Desktop, browser-based UIs, or internal services appropriately. If the client is embedded in a web application, it may require additional protections against web vulnerabilities such as XSS.

Authentication & AuthorisationUse robust authentication mechanisms like OAuth (with fine-grained scopes) or individual API tokens for each user to ensure secure and scalable access management. For example, OAuth 2.0 allows an application to securely access a user's data by requesting access tokens with specific scopes. Alternatively, you can assign a unique API token to each user with defined permissions.

Implement Role-Based Access Control (RBAC) to restrict tool and data access based on user roles. For example, an administrator may have access to MCP tools that can write to a SQL database, while a standard user only has access to a MCP tool with read-only access.

Follow MCP's security principles (specification), such as:

- User Consent: Obtain explicit consent before data exposure or tool invocation.

- Data Privacy: Implement proper access controls and comply with privacy regulations.

- Tool Safety: Tools should be considered capable of arbitrary code execution and must be treated with caution. Users should understand what each tool does before using it.

- LLM Sampling Controls: Users must explicitly approve any LLM sampling requests.

MCP Server Security

Tool Exposure and Access Management:

- Carefully evaluate which tools are exposed to the LLM.

- Restrict access to tools with high privilege or critical system modifications.

- Clearly define what prompts, inputs, and resources are passed to each tool.

- Ensure users fully understand tool functions before granting access.

- MCP Server Compromise: Harden servers with secure configurations, use advanced monitoring, and implement anomaly detection.

- Tool Poisoning: Source tool definitions from trusted servers and monitor for unauthorised changes.

- Privilege Escalation: Enforce strict privilege isolation between tools and validate each tool's authorisation level independently.

- Sanitise all server-side inputs to prevent attacks such as Command Injection, SQL Injection, or and Cross-Site Scripting (XSS).

- Treat tool descriptions, server responses, and metadata as untrusted unless explicitly verified before use.

MCP Client Security

Secret Management:

- Avoid storing sensitive information such as API keys or tokens in plaintext configuration files (e.g., claude_desktop_config.json).

- Store secrets securely on the client device, using mechanisms like system keychains, encrypted storage, or environment variables.

- Understand how the client application operates, especially regarding command execution behaviour. Some clients, such as Claude Desktop, may execute arbitrary commands specified in configuration files. Always review and validate configurations to prevent the introduction of malicious commands.

Transport & Internal Security

Use HTTPS (TLS 1.2+):

- Encrypt all client-server communications using HTTPS with TLS 1.2 or higher.

- Ensure the private key is securely stored to prevent attackers from forging the certificate.

- Apply the principle of least privilege for access to internal services.

Abuse Prevention

Rate limiting and throttling:

- Prevent spamming or runaway AI loops by setting tool-specific rate limits.

- Monitor for unusual request patterns.

- Implement CAPTCHA or challenge mechanisms for suspicious activity.

- Detect and block unauthorised tool usage.

AI-Specific Vulnerabilities

Prompt injection:

- Protect against malicious inputs hijacking tool logic using input validation. For example: a malicious prompt could trigger unauthorised actions like forwarding sensitive data.

- Implement safeguards to prevent unsafe model behaviours, such as invoking an unauthorised MCP tool via an authorised tool.

- Regularly test guardrails for vulnerabilities.

- Validate outputs to prevent downstream logic errors or malicious content, such as misleading information, hallucinations, or malicious inputs that could be used to exploit vulnerabilities in downstream systems.

Logging and Monitoring

- Audit every tool invocation: track user, inputs, timestamps, tool used, and output. Be mindful of logging sensitive data.

- Pipe logs into your SIEM or logging stack (e.g., Elastic, Splunk, etc.).

- Set alerts for unexpected tool usage, abuse patterns, or unusual invocation times.

- Each tool should log key actions it performs (e.g., "Queried 3,000 docs from index X").

Additional Best Practices

- Regular Updates: Patch MCP servers and clients when new release are available.

- Security Audits / AI Red Teaming: Conduct regular AI Red Teaming to identify potential security issues.

Building Augmented LLM for Threat Detection

I came up with an idea of implementing an augmented LLM using MCP to perform log analysis with the intent of identifying potential threats. The primary objective was to explore the practical implementation of MCP and gain hands-on experience in leveraging its capabilities for security use cases. MCPs to interact with Elasticsearch already exist, such as https://github.com/elastic/mcp-server-elasticsearch and https://github.com/cr7258/elasticsearch-mcp-server. These are more general purpose Elasticsearch connectors and probably not suited for this implementation.

Lab Environment Setup

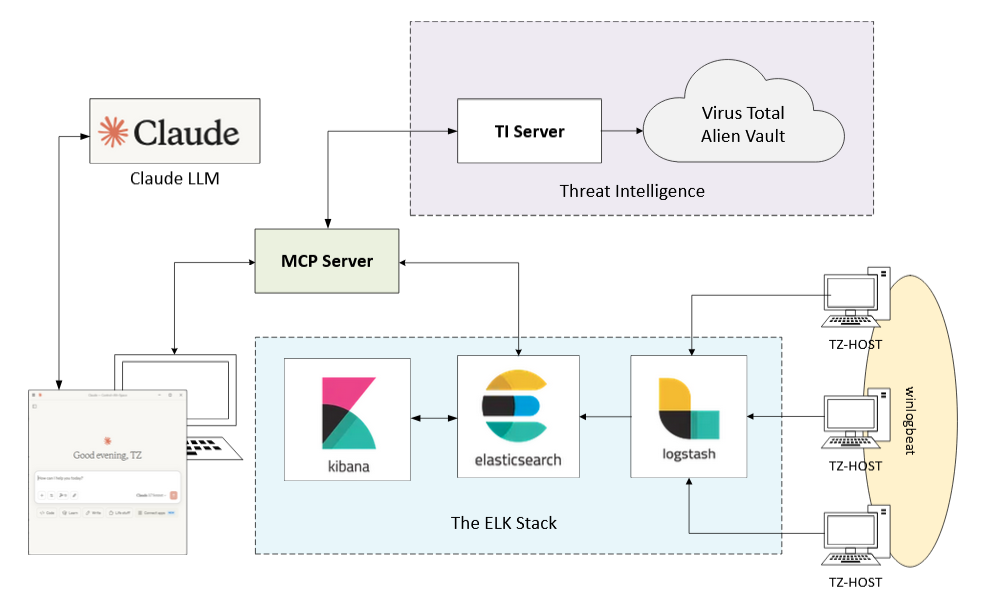

The architecture is quite simple. On the TZ host machines, Sysmon is configured to capture system events and logs. These Sysmon logs are then forwarded to an ELK stack for centralised logging and analysis. On the user's host, Claude Desktop is installed, and it's connected to Claude Pro (Sonnet 3.7), with the MCP server running locally. The MCP server interacts with Elasticsearch to query the necessary logs for threat analysis. In addition, the server leverages Threat Intelligence (TI) from VirusTotal and AlienVault to fetch up-to-date Indicators of Compromise (IOCs). A proxy API application is also running locally on the user's host, forwarding requests to VirusTotal and AlienVault. Below is the block diagram illustrating the lab setup.

If you want to try it yourself, step-by-step setup guide is here

The ELK Stack

The ELK stack is a powerful log and data analytics platform composed of Elasticsearch, Logstash, and Kibana. It allows to collect, process, store, and visualise log data in real time — Logstash ingests and parses data, Elasticsearch indexes and stores it, and Kibana provides an interactive dashboard for search and visualisation.

Log and Log Shipping

Sysmon was installed on the hosts to provide more comprehensive Windows logging and a slightly modified version of this Sysmon configuration was used. Fine-tuning the Sysmon configuration is crucial in real-world scenarios to prevent log explosion. However, since that wasn't the focus of this blog, I opted for a simpler approach. Winlogbeat was used to ship the logs to Logstash.

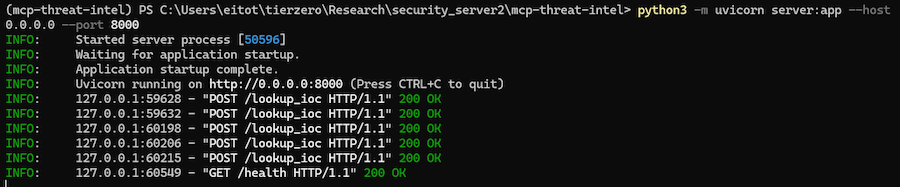

Threat Intelligence Server

The API proxy application was running locally, forwarding requests to VirusTotal and AlienVault as needed to query up-to-date IoC information. Free accounts were created for both VirusTotal and AlienVault. The VirusTotal free account had limitations of 4 requests per minute and 500 requests per day.

MCP Server

Full vibing, like our last blog post. I made sure to provide as much context as possible, including the programming language, libraries, desired MCP tools and their capabilities, environment setup, the operating system in use, among other details. It's always a good idea to start with a small set of MCP tools. Once everything is up and running, you can gradually expand your setup and add more functionality to your LLM. In fact, the Threat Intelligence add-on was entirely developed by Claude. I provided the MCP server code and simply asked, "What can be added?".

Keep in mind that LLMs can make mistakes, such as suggesting to use a function that does not exist. Always review the generated code and make necessary adjustments.

MCP Client

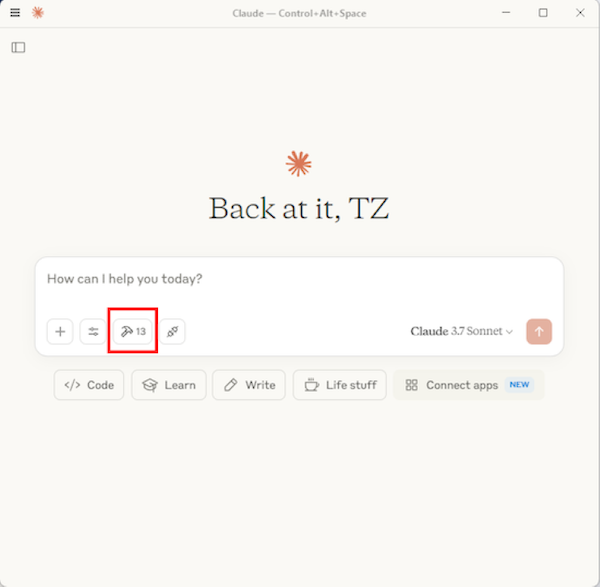

I used Claude Desktop as client. The configuration file "claude_desktop_config.json" was created to enable MCP tools. I also signed up to Claude Pro, to avoid usage limits.

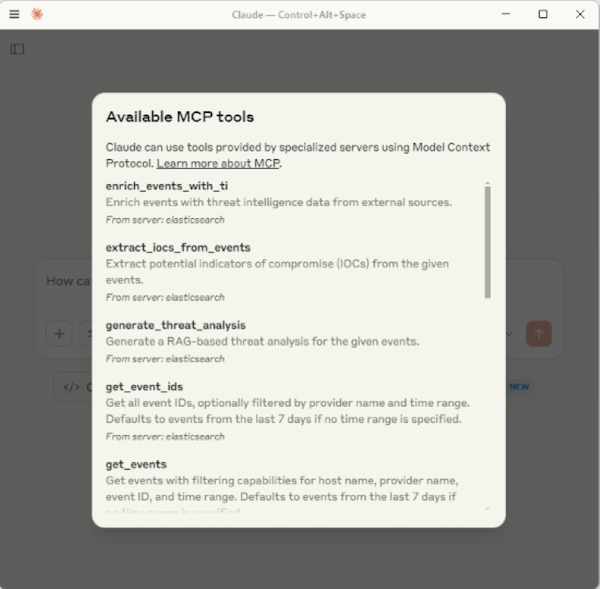

Once everything is configured, and MCP tools are successfully loaded, Claude should display the available tools when clicking the icon highlighted in the screenshot below.

Results

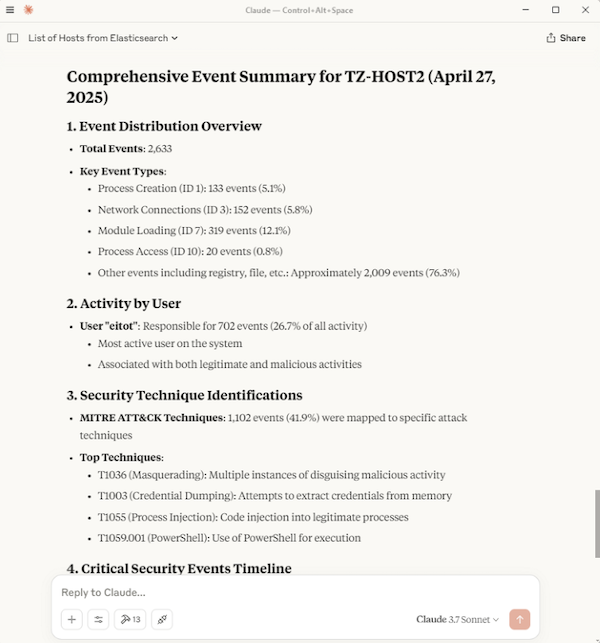

Scenario 1 - Credential Dump

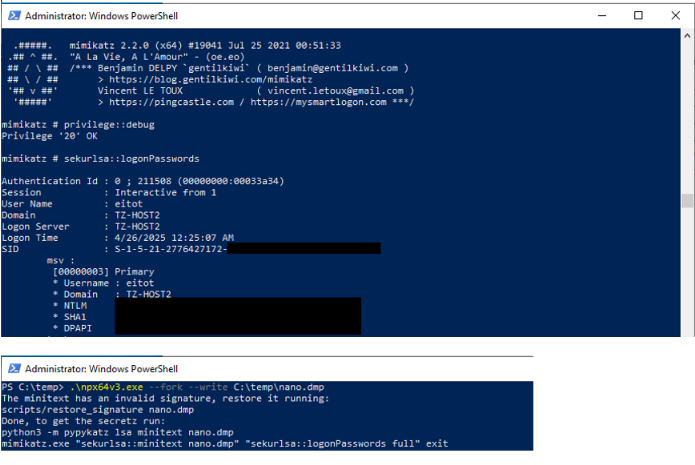

I attempted a credential dump using the well-known tool Mimikatz, as well as a manually obfuscated commonly used credential dump tool, NanoDump. The following screenshot shows the performed actions:

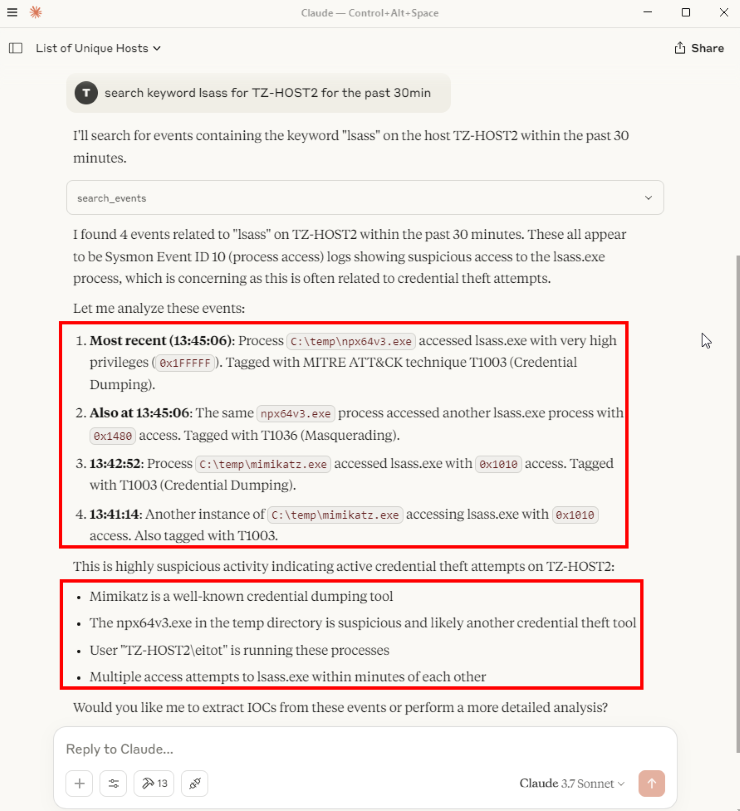

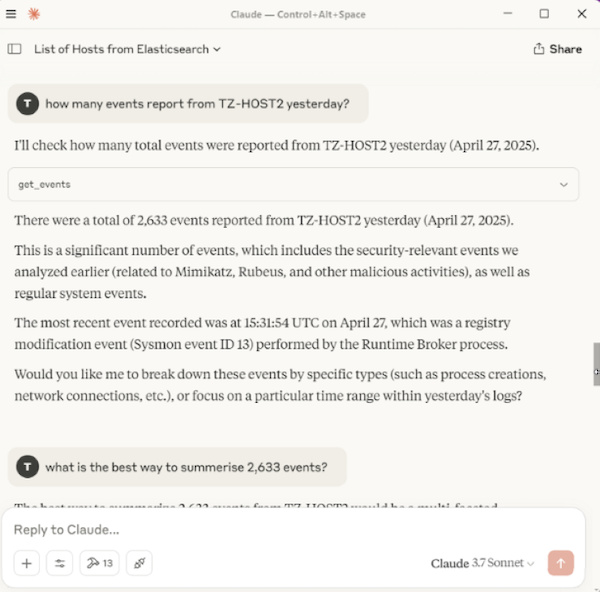

First, to narrow down the logs, I asked Claude to list all the hosts in Elasticsearch. TZ-HOST2 is the host of interest in this instance.

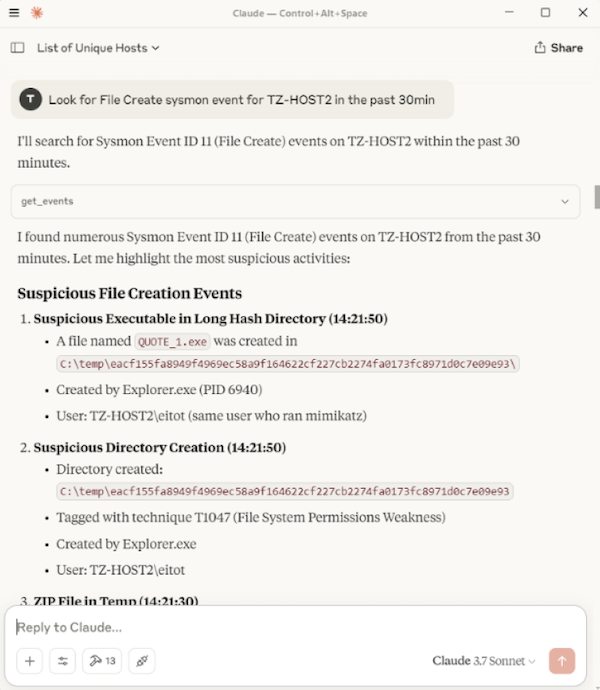

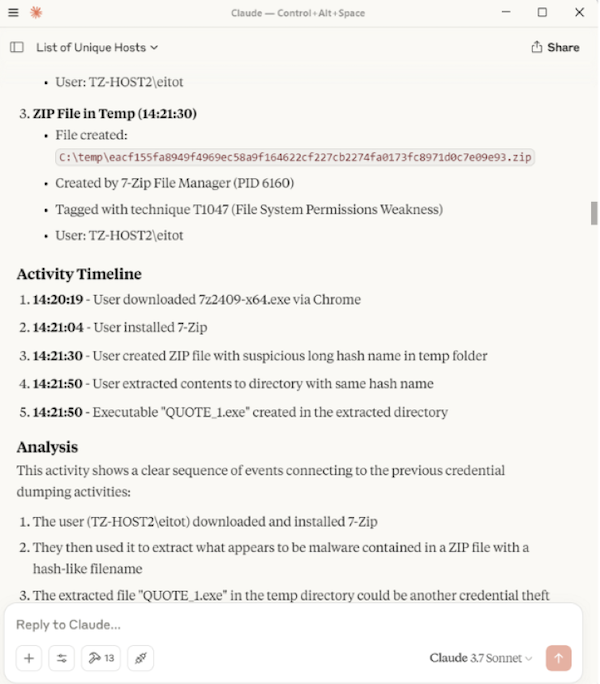

Searching for the keyword lsass for TZ-HOST2, the LLM, with the help of the MCP, successfully identified both LSASS dumps and gave useful insights.

Scenario 2 - A Known Malicious File

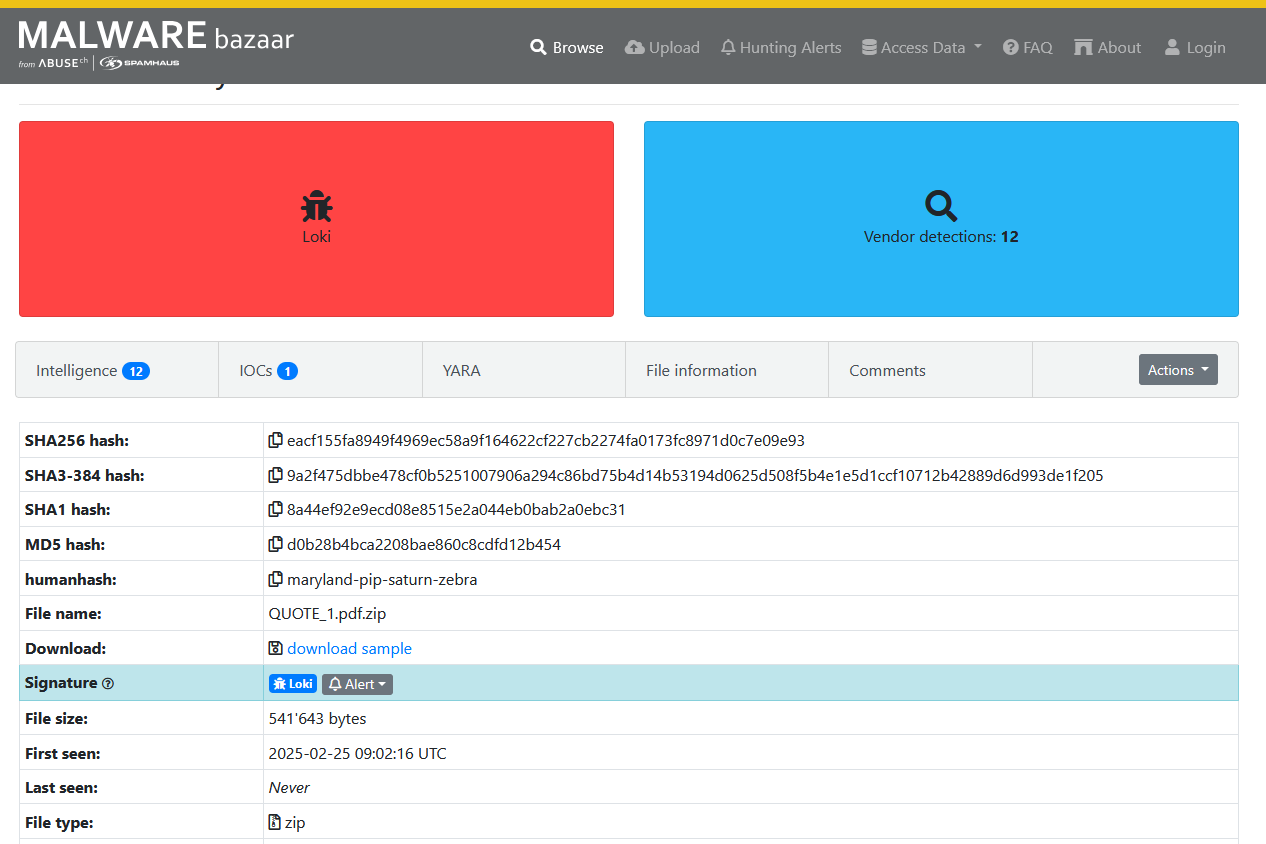

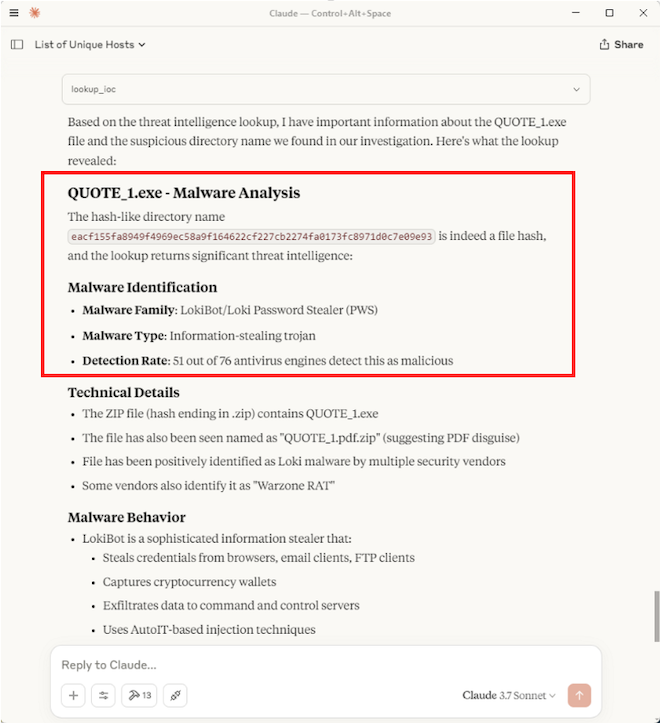

I downloaded a known malicious Zip file from Malware Bazaar to test IOC lookup function. The malicious file was used by the cybercrime group Loki.

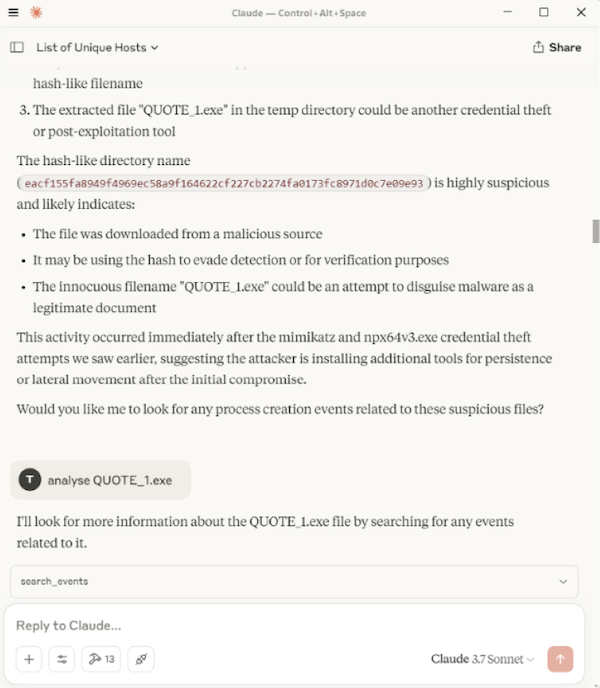

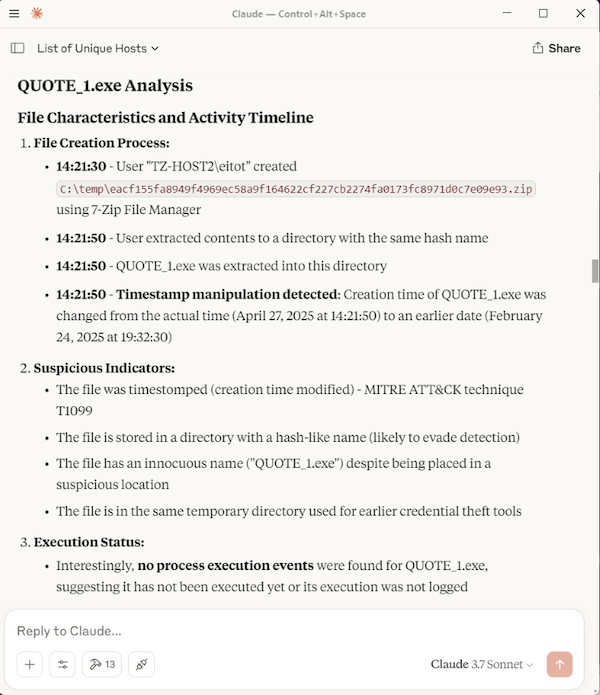

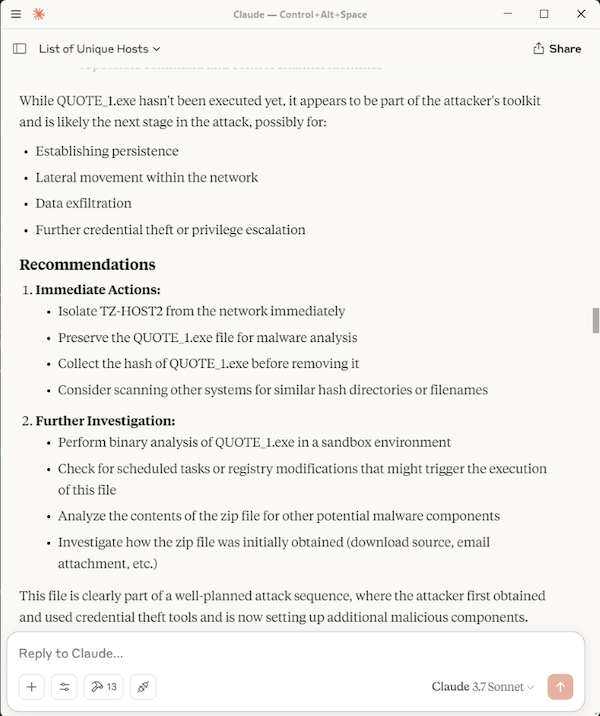

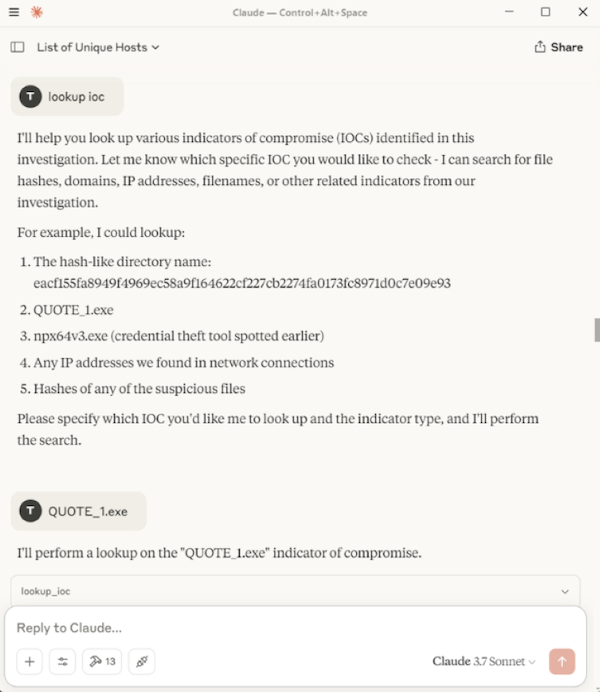

I extracted the file on TZ-HOST2 to trigger a Sysmon File Create event (Event ID 11). I instructed the LLM to query and analyse the File Create logs. It successfully identified flagged it, and when I asked it to check the IOC, the LLM used the MCP tool which successfully found a match. The full inputs and responses are shown below.

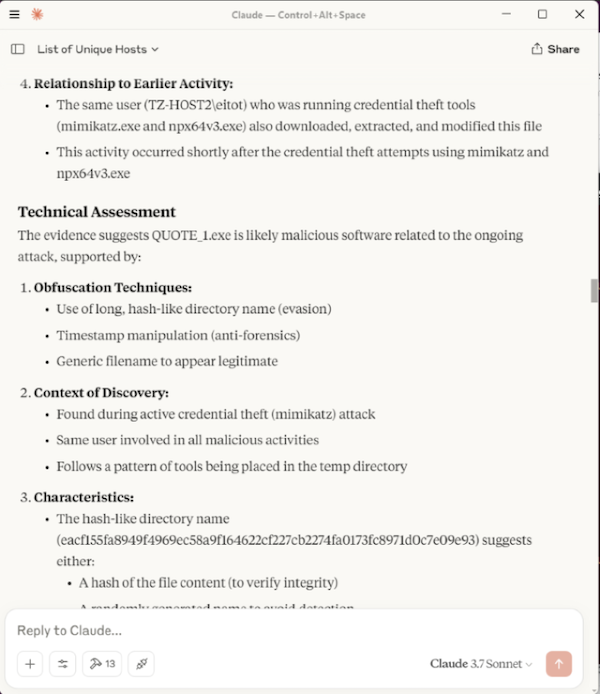

Here is the complehensive summary that was provided at the end.

The access log of the TI server showed incoming request from the MCP tool, triggered by the IOC lookup.

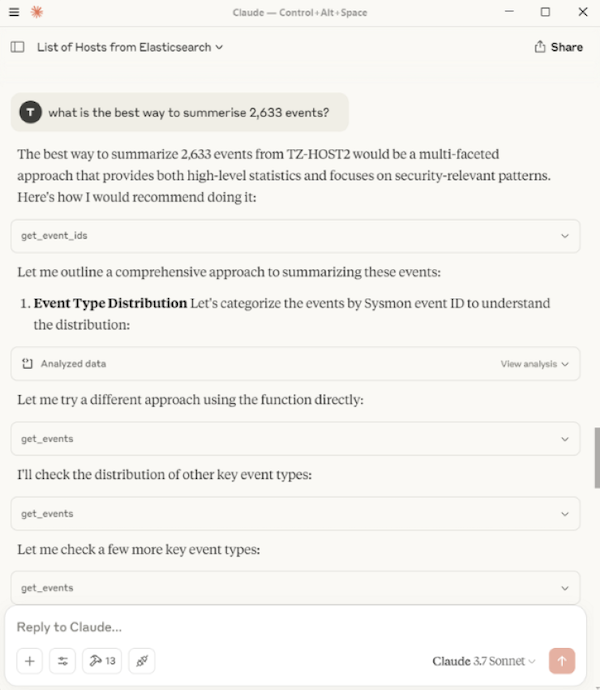

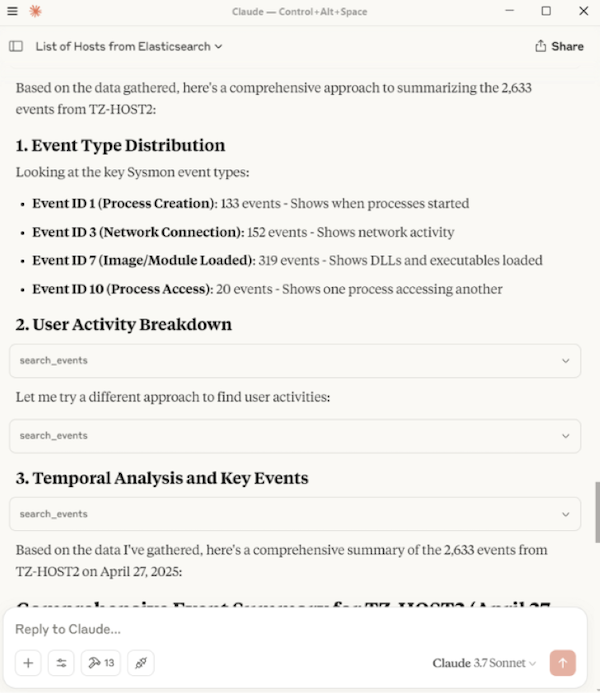

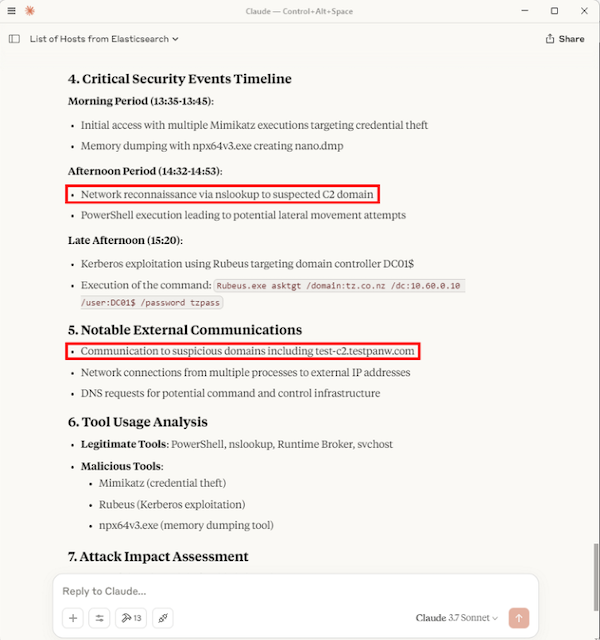

Scenario 3 - Summarise Logs

After a few queries, I decided to simply summarise the Sysmon logs for TZ-HOST2 for a specific date. The results were great, identifying other activities such as the use of Rubeus.exe, including its command line execution, and a domain lookup of a malicious test C2 domain, test-c2.testpanw.com (provided by Palo Alto for testing purposes). The full inputs and responses are shown below.

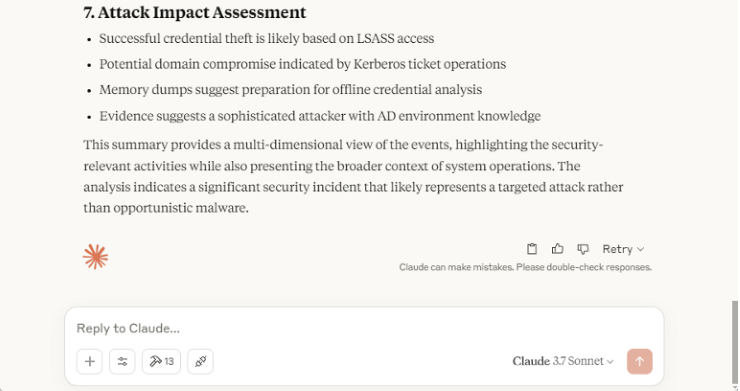

Also, the LLM provided a nice attack impact assessment at the end.

Conclusion

This was just a quick and simple setup, but enough to showcase the advantage of using an LLM to leverage its analytical skills. The main bottleneck is scalability — it would struggle with search terms that could potentially return thousands of records. LLM tokens and usage limitations need to be also taken into consideration, as well as limited session memory (stored context).

Once you're familiar with the tools and understand their limitations, the analysis becomes much easier, as you can craft narrower and more efficient search terms. To improve scalability, you could tweak the MCP code to optimise filtering before passing to the LLM, fine-tune the Sysmon configuration, or even create an MCP tool that records normal events and only triggers analysis when anomalous activity is detected.

Eito Tamura - Principal Consultant

Eito Tamura - Principal Consultant