03 April 2025

Claudio Contin

Introduction

Artificial Intelligence (AI), generative AI, and large language models (LLMs) are becoming increasingly prevalent in our daily lives. Organisations are beginning to integrate LLMs into their processes, applications, customer support, help-desk and security operations.

Tier Zero Security have been developing expertise in AI security, including AI red teaming, understanding models training, guardrails, and experimenting with AI red teaming tooling such as Microsoft PyRIT and PyRIT-Ship. Additionally, we have been evaluating LLMs using plugins (tools) with frameworks like nerve and various Model Context Protocols (MCP).

While researching, we evaluated options to use LLMs to try automating some of our workflows. For this post, we have explored ways to use an LLM to answer questions related to the New Zealand Information Security Manual (NZISM), and Linux hardening configuration best practices.

The overall idea was being able to feed the model with current, up to date and structured information. Also, we wanted to ensure none of the data was being sent to LLM third-party API services, such as OpenAI.

Options

Of course, to query public information, such as NZISM controls, using publicly available models, including OpenAI, Google Gemini, etc., is always an option.

For this research, we focused on ensuring that:

- No data was sent outside our environment

- We could feed custom up-to-date data for the LLM to fetch and use it to compose responses

- The LLM would only stick to relevant responses avoiding hallucinations by

guessingthe right answers

One option we considered was to take an existing model and train it further with the data, but we quickly discarded that option, since one of our requirements was to ensure we could feed the model up-to-date data at any time, without needing to touch the model itself.

Note that if the data does not often change, fine-tuning a model might be a better option for more accurate, faster and specialised language style responses.

To run an LLM model locally we decided to use Ollama, which also allows to download multiple publicly available models, including ones that support embeddings and tools.

For the LLM model, we chose to use dolphin3 (8B parameters). We have tried a few other models, but decided to stick to this one. From our initial tests, larger models did not seem to make much difference. Also, an 8B parameters can be run on modern consumer hardware, without necessarily needing dedicated GPUs (for tests).

Retrieval-Augmented Generation (RAG)

The alternative of fine-tuning a model is to use Retrieval-Augmented Generation (RAG). The idea is to feed an embedding model with the data, which gets stored in a vector database (Vector DB - collection of data stored as mathematical representations).

When a new user prompt is sent to the LLM, the embedding model fetches relevant data based on the prompt from the Vector DB, and feeds the results to the LLM together with the original prompt.

The LLM is then able to contextualise the original user prompt with the vectorised data to provide a relevant response.

For our first test we tried an open source tool named Verba, developed by Weaviate.

The tool allows to configure the LLM API endpoint (Ollama in this instance), the LLM and the embeddings models. The web interface allows users to upload documents, using different chunking methods (Token, Sentence, Semantic, HTML, Markdown, etc.). The only RAG library supported by Verba, at the time of writing of this post, was LangChain. Also, the chat UI allows user to specify the system prompt.

The initial test consisted in downloading the NZISM PDF version, together with the CIS benchmarks for Ubuntu Linux 22.04, also a PDF document, with different chunking techniques for each test.

The results returned by the LLM were irrelevant or incorrect. This is likely caused by the data contained in the uploaded PDF documents that cannot be easily chunked in a way that allows the LLM to retrieve the relevant parts to compose the response.

LlamaIndex with Python

We decided to switch experimenting with the Python LlamaIndex libraries for embeddings and interface with the Ollama API.

For the embedding model, we chose to use nomic-embed-text (high-performing open embedding model with a large token context window).

The first test consisted in replicating the initial test using NZISM and CIS benchmark PDF, using the LlamaIndex SimpleDirectoryReader and VectorStoreIndex.from_documents functionalities.

The results indicated similar issues as the original test performed with the Verba tool: likely the quality of the data present in the PDF is not ideal for this particular use case. For this reason, we decided to move away from PDF, and rather scrape the public web version of the NZISM document, as well as manually crafting some markdown based checklists for Ubuntu 22.04 security best practices, such as filesystem configuration and SSH service configuration.

Data!

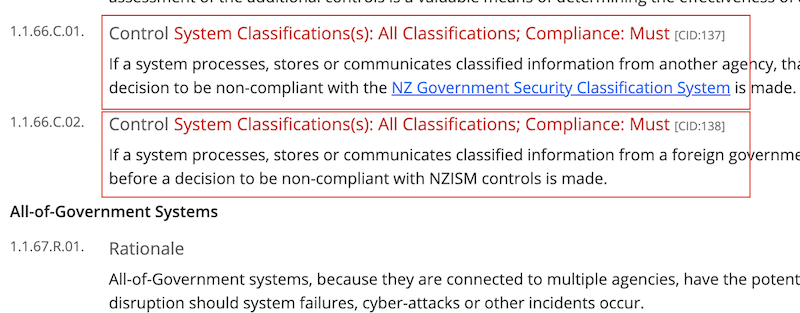

In order to improve the data quality, we decided to start scraping only short sections of the NZISM HTML page, focus on the Control sections, as shown below.

jQuery was used, loaded into the browser Developer Console, and the following JavaScript code using jQuery was executed, which allowed to extract the relevant sections:

let res = "";

$('div[id^="Paragraph-"] h5').has('small.ci').each(function() {

let h5Text = $(this).text().replace(/\s+/g, ' ').trim();

let pElement = $(this).next('p');

let pText = pElement.text().trim();

let listText = "";

let nextElement = pElement.next();

while (nextElement.is('ul, ol')) {

listText += "\n";

nextElement.find('li').each(function() {

listText += "- " + $(this).text().trim() + "\n";

});

nextElement = nextElement.next();

}

res += h5Text + "\n" + pText + listText + "--------------\n";

});

console.log(res);

The content of the extracted Control sections was saved in a text file. A bash script was then used to parse the sections and create individual files containing each of the sections, in a Markdown format and with the .md extension, such as:

## Control System Classifications(s): All Classifications; Compliance: Must [CID:127]

System owners seeking a dispensation for non-compliance with any baseline controls in this manual MUST be granted a dispensation by their Accreditation Authority.

Where High Assurance Cryptographic Systems (HACS) are implemented, the Accreditation Authority will be the Director-General GCSB or a formal delegate.Also, a couple of small samples related to CIS benchmark best practices around filesystem configuration and SSH server configuration best practices were added, such as:

## Filesystem configuration CIS benchmark recommendations

A dedicated partition needs to exist for:

* /tmp

* /var/tmp

* /var/log

The following partitions should be separate and set with the mount options noexec and nosuid:

* /tmp

* /var/tmp

The following partition should have the noexec mount option:

* /dev/shmFinal setup

A Docker container with Python 3.12.8 was used to run Ollama and the test. The following Python package were installed using pip:

qdrant_client llama_index llama-index-llms-ollama llama-index-embeddings-ollama llama-index-vector-stores-postgres llama-index-vector-stores-qdrantBelow is the Python code used for tests that:

- Parses the Markdown files from the data folder

- Stores the mathematical representation of the content to the Vector DB

- Defines the system prompt. For this, we used: "You are a security expert specialized in New Zealand Information Security Manual (NZISM) and Linux hardening. Provide concise, accurate answers based on the provided context. Do not attempt to guess the answer or information if you are not 100% sure about. Keep the answer short and to the point, but ensure to include all the details NZISM states related to the question."

- Initialises the chat engine and retrieves the relevant chunks from the Vector DB

- Initialises the prompt and waits for user input, which is then passed to the retriever to obtain the relevant chunks. The chunks are then passed to the LLM model together with the original user and system prompts to generate the answer

For this test, the temperature value was set to 0.1.

# Imports

from pathlib import Path

import time

import qdrant_client

from llama_index.core import VectorStoreIndex, SimpleDirectoryReader, StorageContext, Settings, PromptTemplate

from llama_index.core.node_parser import SentenceSplitter

from llama_index.core.response_synthesizers import get_response_synthesizer

from llama_index.llms.ollama import Ollama

from llama_index.embeddings.ollama import OllamaEmbedding

from llama_index.vector_stores.qdrant import QdrantVectorStore

from llama_index.core.retrievers import VectorIndexRetriever

# Variables for selecting the top 20 most relevant chunks (from Vector DB) based on the user input, and filter out chunks with a similarity score below 0.72

similarity_top_k_val = 20

similarity_threshold_value = 0.72

# Multi-line user input support

def get_multiline_input():

print("Enter your prompt (press Enter three times to finish):")

lines = []

prev_line = None

while True:

line = input()

if line == "" and prev_line == "":

break

if prev_line is not None or line != "":

lines.append(line)

prev_line = line

return "\n".join(lines).strip()

# Custom retriever with similarity threshold (filters chunks below the similarity_threshold passed as argument)

class ThresholdRetriever(VectorIndexRetriever):

def __init__(self, index, similarity_threshold=0.75, similarity_top_k=3):

super().__init__(index=index, similarity_top_k=similarity_top_k)

self.similarity_threshold = similarity_threshold

def _retrieve(self, query_bundle):

nodes = super()._retrieve(query_bundle)

filtered_nodes = [node for node in nodes if node.score >= self.similarity_threshold]

return filtered_nodes

# Initialise Qdrant client (Vector DB location)

client = qdrant_client.QdrantClient(path="./qdrant_data")

# Initialise LLM and embedding model - temperature 0.1 (low)

llm = Ollama(model="dolphin3", request_timeout=99999.0, temperature=0.1)

embed_model = OllamaEmbedding(model_name="nomic-embed-text", request_timeout=99999.0)

Settings.llm = llm

Settings.embed_model = embed_model

# Custom SentenceSplitter: max chunk size of 512, and overlap of 10

text_splitter = SentenceSplitter(chunk_size=512, chunk_overlap=10)

Settings.text_splitter = text_splitter

# Initialise vector store (Vector DB)

vector_store = QdrantVectorStore(client=client, collection_name="testdata")

storage_context = StorageContext.from_defaults(vector_store=vector_store)

# Check if the collection already exists

collection_exists = client.collection_exists("testdata")

# If the collection doesn’t exist, load documents and create the index

if not collection_exists:

print("Collection not found. Initializing...")

documents = SimpleDirectoryReader("./data", recursive=True).load_data()

index = VectorStoreIndex.from_documents(documents, storage_context=storage_context, show_progress=True)

print("Index created and data indexed.")

else: # If the collection exists, load the existing index

print("Collection found. Loading...")

index = VectorStoreIndex.from_vector_store(vector_store=vector_store, storage_context=storage_context)

print("Index loaded.")

# System prompt

system_prompt = (

"You are a security expert specialized in New Zealand Information Security Manual (NZISM) and Linux hardening. "

"Provide concise, accurate answers based on the provided context. "

"Do not attempt to guess the answer or information if you are not 100% sure about. "

"Keep the answer short and to the point, but ensure to include all the details NZISM states related to the question. "

)

# PromptTemplate (to preserve history context)

qa_prompt = PromptTemplate(

"System: {system_prompt}\n"

"Context information is below:\n"

"--------------------\n"

"{context_str}\n"

"--------------------\n"

"Conversation history:\n"

"{chat_history}\n"

"--------------------\n"

"Given the context and history, answer the following question:\n"

"Question: {query_str}\n"

)

# Create a chat engine specifying the system and PromptTemplate prompts

chat_engine = index.as_chat_engine(

chat_mode="context",

llm=llm,

text_qa_template=qa_prompt,

system_prompt=system_prompt,

similarity_top_k=similarity_top_k_val,

verbose=True

)

# Create a retriever to fetch raw chunks from Vector DB

retriever = ThresholdRetriever(index=index, similarity_threshold=similarity_threshold_value, similarity_top_k=similarity_top_k_val)

# User prompt loop

print("Type your questions below")

while True:

prompt = get_multiline_input()

if prompt.lower() == "quit":

print("Goodbye.")

break

if not prompt:

print("Enter a question")

continue

print("-" * 50)

print("*prompt*")

print(prompt)

print("-" * 50)

# Retrieve relevant chunks based on user prompt

retrieved_nodes = retriever.retrieve(prompt)

# Print retrieved chunks with scores

print("\nRetrieved Chunks:")

for i, node in enumerate(retrieved_nodes):

print(f"\nChunk {i+1} (Score: {node.score:.3f}):")

print(node.text)

print("-" * 50)

print(" ")

# Send prompt and chunks to the LLM model and get and stream the response

response = chat_engine.stream_chat(prompt)

print("\nResponse:\n")

for token in response.response_gen:

print(token, end="", flush=True)

time.sleep(0.05)

print("\n")Results

For the prompt what controls an agency MUST follow in relation to email security?, the response was:

--------------------------------------------------

*prompt*

what controls an agency MUST follow in relation to email security?

--------------------------------------------------

Retrieved Chunks:

Chunk 1 (Score: 0.764):

## Control System Classifications(s): Top Secret, Confidential, Secret; Compliance: Must [CID:1754]

Agencies MUST prevent unmarked and inappropriately marked emails being sent to intended recipients by blocking the email at the email server, originating workstation or both.

--------------------------------------------------

Chunk 2 (Score: 0.744):

## Control System Classifications(s): All Classifications; Compliance: Must [CID:1780]

Where an agency has system users that send email from outside the agency’s network, an authenticated and encrypted channel MUST be configured to allow email to be sent via the centralised email gateway.

--------------------------------------------------

Chunk 3 (Score: 0.743):

## Control System Classifications(s): Secret, Confidential, Top Secret; Compliance: Must [CID:1755]

Agencies MUST enforce protective marking of emails so that checking and filtering can take place.

--------------------------------------------------

Chunk 4 (Score: 0.742):

## Control System Classifications(s): All Classifications; Compliance: Must [CID:1774]

Agencies MUST disable open email relaying so that email servers will only relay messages destined for the agency’s domain(s) and those originating from authorised systems or users within that domain.

--------------------------------------------------

Chunk 5 (Score: 0.738):

## Control System Classifications(s): All Classifications; Compliance: Must [CID:1684]

Agencies MUST develop and implement a policy governing the use of email.

--------------------------------------------------

Chunk 6 (Score: 0.737):

## Control System Classifications(s): All Classifications; Compliance: Should [CID:1745]

Agencies SHOULD configure the following gateway filters:

* inbound and outbound email, including any attachments, that contain:

malicious code;

content in conflict with the agency’s email policy;

content that cannot be identified;

deny listed or unauthorised filetypes; and

* malicious code;

* content in conflict with the agency’s email policy;

* content that cannot be identified;

* deny listed or unauthorised filetypes; and

* encrypted content, when that content cannot be inspected for malicious code or authenticated as originating from a trusted source;

* emails addressed to internal-use only email aliases with source addresses located from outside the domain; and

* all emails arriving via an external connection where the source address uses an internal agency domain name.

--------------------------------------------------

Chunk 7 (Score: 0.734):

## Control System Classifications(s): All Classifications; Compliance: Must [CID:1726]

Agencies MUST make their system users aware of the agency’s email usage policies.

--------------------------------------------------

Chunk 8 (Score: 0.728):

## Control System Classifications(s): All Classifications; Compliance: Should [CID:1756]

Agencies SHOULD enforce protective marking of emails so that checking and filtering can take place.

--------------------------------------------------

Chunk 9 (Score: 0.728):

## Control System Classifications(s): All Classifications; Compliance: Must [CID:1771]

Agencies MUST ensure that the requirements for blocking unmarked and outbound emails are also applied to automatically forwarded emails.

--------------------------------------------------

Chunk 10 (Score: 0.728):

## Control System Classifications(s): All Classifications; Compliance: Must [CID:1764]

Agencies MUST configure email systems to reject, log and report inbound emails with protective markings indicating that the content of the email exceeds the accreditation of the receiving system.

--------------------------------------------------

Chunk 11 (Score: 0.727):

## Control System Classifications(s): All Classifications; Compliance: Must [CID:1760]

Agencies MUST configure systems to block any outbound emails with a protective marking or endorsement indicating that the content of the email exceeds the classification of the communication path.

--------------------------------------------------

Response:

Agencies must:

1. Prevent unmarked and inappropriately marked emails from being sent to intended recipients by blocking them at the email server or originating workstation.

2. Enforce protective marking of emails for checking and filtering purposes.

3. Disable open email relaying, allowing email servers to only relay messages destined for the agency's domain(s) or originating from authorized systems/users within that domain.

4. Develop and implement a policy governing the use of email.

5. Configure gateway filters to block malicious code, content in conflict with the agency's email policy, unauthorized filetypes, encrypted content, emails addressed to internal-use only aliases, and emails arriving via external connections using internal domain names.

6. Make system users aware of the agency's email usage policies.

7. Ensure that requirements for blocking unmarked and outbound emails are applied to automatically forwarded emails.

8. Configure email systems to reject, log, and report inbound emails with protective markings exceeding the accreditation of the receiving system.

9. Block any outbound emails with a protective marking or endorsement indicating that the content exceeds the classification of the communication path.

10. Implement measures to monitor personnel's compliance with email usage policies.

11. Notify intended recipients of blocked emails when possible.

12. Assess and handle unmarked emails originating from outside the government in accordance with the classification system.

13. Only send notification of undeliverable, bounced, or blocked emails to verified senders via SPF or other trusted means.

14. Implement TLS between email servers where significant volumes of classified information are passed via email to other agencies.

15. Enable MTA-STS to prevent unencrypted transfer of emails between compliant servers.

16. Encrypt aggregated agency data over insecure networks using approved algorithms and protocols when the compromise would present a significant impact to the agency.

17. Record all successful and failed logon attempts, lock accounts after three failed attempts, reset locked accounts, and remove or suspend accounts no longer needed.

These controls are based on NZISM guidelines for email security.The result matched the right controls of NZISM around email security, indicating that the correct chunks were retrieved from the Vector DB, which were used to construct the response.

Below some example of further tests:

--------------------------------------------------

*prompt*

any policies related to automatically lock the screen after few minutes of inactivity described in the NZISM document?

--------------------------------------------------

Response:

The NZISM document recommends that agencies configure systems with a session or screen lock and specifies the following requirements:

- The lock should activate after a maximum of 10 minutes or manually by the user.

- The lock should completely conceal all information on the screen.

- The screen should not turn off before the lock is activated.

- Users must reauthenticate to unlock the system.

- Disabling the locking mechanism should be prevented.

For systems processing, storing, or communicating confidential, secret, or top-secret information, agencies must follow these requirements instead of the "should" guidelines.--------------------------------------------------

*prompt*

can you tell me what different type of password policies agencies should implement to align with NZISM?

--------------------------------------------------

Response:

Agencies should implement the following types of password policies in alignment with NZISM:

1. Minimum password length: Agencies SHOULD enforce a minimum password length, which can be either 10 characters or 16 characters without complexity requirements.

2. Character sets: Passwords SHOULD consist of at least three character sets including lowercase characters (a-z), uppercase characters (A-Z), digits (0-9), and punctuation/special characters.

3. Frequency of change: Agencies SHOULD enforce a password expiration policy, requiring users to change their passwords every 90 days or less.

4. Sequential or predictable passwords: Agencies MUST NOT allow sequential or predictable reset passwords, reused passwords within eight changes, or stored passwords in the clear on the system.

5. Root direct access: Password authentication for root direct access SHOULD be disabled.

6. SSH configuration: Agencies should follow the NZISM recommended SSH server configuration security best practices, which include disabling password authentication and certain cipher suites, key exchange methods, MAC algorithms, and public key algorithms.

7. Cryptographic protocols and algorithms: Agencies MUST follow the requirements of the NZISM in the selection and implementation of cryptographic protocols and algorithms for key management (Chapter 17 - Cryptography).

8. Access control: Agencies SHOULD apply the access controls of the NZISM to access card systems (CID:3139) and ensure that passwords are changed at least every 90 days, preventing users from changing their password more than once a day, checking for compliance with password policies, and ensuring that keyword searches on all textual data are performed before releasing any classified information.--------------------------------------------------

*prompt*

when dealing with external contractors, subcontractors and suppliers what does nzism recommend to reduce risk around supply chain attacks?

--------------------------------------------------

Response:

NZISM recommends the following measures to reduce risks related to supply chain attacks when dealing with external contractors, subcontractors, and suppliers:

1. Incorporate consideration of supply chain risks into an organization-wide risk assessment and management process.

2. Require tenderers and contractors to provide information identifying any restrictions on the disclosure, transfer or use of technology arising out of export controls or security arrangements.

3. Reject contractors and subcontractors where they do not possess the necessary reliability to exclude risks to national security; or have breached obligations relating to security of information during a previous contract in circumstances amounting to grave misconduct.

4. Ensure that supply chains are transparent, with clear lines of sight and accountability throughout the supply chain.

These measures help minimize potential vulnerabilities and ensure that external contractors, subcontractors, and suppliers adhere to necessary security requirements.

--------------------------------------------------

*prompt*

anything related to products or equipment used by external contractors?

--------------------------------------------------

Response:

Yes, NZISM has several recommendations related to products or equipment used by external contractors:

1. Agencies must require suppliers and contractors to provide the provenance of any products or equipment (file_path: /data/mix/561.md).

2. For equipment that is expected to have an extended operational life in a critical system, agencies should provide for the acquisition of necessary licenses, information to produce spare parts, components, assemblies, testing equipment, and technical assistance agreements (file_path: /data/mix/319.md).

3. Agencies must include contractual obligations on all contractors and subcontractors to safeguard information throughout the tendering and contracting procedure (file_path: /data/mix/393.md).

4. All conductive elements of a cabinet, rack or case housing any equipment must be earth bonded (file_path: /data/mix/260.md).

These measures help ensure that external contractors use secure products and equipment while working with sensitive information.--------------------------------------------------

*prompt*

below is the result of the sshd -T command ran on a Linux host. Can you tell me if this SSH configuration follows NZISM best practices?

port 22

addressfamily any

listenaddress [::]:22

listenaddress 0.0.0.0:22

usepam yes

[truncated for brevity]

--------------------------------------------------

Response:

Based on the provided SSH configuration output and comparing it against NZISM best practices, here are some observations:

1. **Password Authentication**: The configuration states `passwordauthentication no`, which aligns with the NZISM best practice of disabling password authentication.

2. **Root Direct Access**: The option `permitrootlogin without-password` indicates that root login is not allowed with a password but might be possible using other authentication methods (e.g., public key). This partially aligns with the NZISM recommendation to disallow root access, although it does not explicitly state "without-password" in the best practices.

3. **X11 Forwarding**: The configuration states `x11forwarding no`, which matches the NZISM best practice of disabling X11 forwarding.

4. **TCP Port Forwarding**: The option `AllowTcpForwarding yes` is set, which contradicts the NZISM best practice to disable TCP port forwarding (`AllowTcpForwarding no`). This suggests a deviation from recommended security practices.

5. **Cipher Suites and Key Exchange Methods**: The configuration lists several cipher suites and key exchange methods that are not explicitly mentioned in the provided NZISM context as being disabled. However, it does not list any of the specific ciphers or key exchange methods that NZISM recommends disabling. Without a direct comparison to the recommended disabled options from NZISM (e.g., aes128-cbc, ecdh-sha2-nistp256), we cannot confirm full alignment here.

6. **MAC Algorithms**: The configuration lists several MAC algorithms (`umac-64-etm@openssh.com`, `umac-128-etm@openssh.com`, etc.) that are not directly referenced in the NZISM context provided. Without a clear mapping to the recommended disabled MAC algorithms from NZISM, we cannot confirm alignment here.

7. **Public Key Algorithms**: The configuration does not explicitly disable any public key algorithms as suggested by the NZISM best practices (e.g., disabling `ssh-rsa`). It lists `pubkeyauthentication yes`, indicating that public key authentication is allowed but does not specify which algorithms are enabled or disabled.

8. **Banner**: The configuration states `banner none`, aligning with the NZISM best practice of specifying a banner, albeit in a way that suggests no banner will be shown (`Banner /etc/path_to_banner*` was mentioned as a recommendation).

9. **Chroot Directory and Trusted User Keys**: The configuration shows `chrootdirectory none` and `trustedusercakeys none`, which aligns with the NZISM best practice of not specifying a chroot directory or trusted user keys.

10. **Logging and Security Level**: The configuration sets `loglevel INFO` and `syslogfacility AUTH`, which are general settings that do not directly contradict any NZISM best practices mentioned in the provided context.

11. **Authorized Keys and Host Keys**: The configuration lists authorized_keys files and host keys, aligning with typical SSH server configurations but without specific reference to NZISM recommendations for these settings.

12. **Authentication Methods**: The configuration states `authenticationmethods publickey,password publickey,keyboard-interactive`, which suggests that multiple authentication methods are allowed (`publickey` and `password`). However, the NZISM best practice emphasizes disabling password authentication, indicating a potential misalignment here.

Based on this analysis, while some aspects of the SSH configuration align with NZISM best practices, others deviate from them. For example, enabling TCP port forwarding and allowing multiple authentication methods (including password) contradict specific NZISM recommendations. Without direct access to the full NZISM document for comparison, it's challenging to provide a comprehensive assessment. However, based on the provided context, there are notable areas where the configuration does not fully align with NZISM best practices.--------------------------------------------------

*prompt*

does this Linux filesystem configuration aligns with CIS benchmarks?

--------------------------------------------------

/dev/xvda1 on / type ext4 (rw,relatime,discard,errors=remount-ro)

devtmpfs on /dev type devtmpfs (rw,nosuid,noexec,relatime,size=482524k,nr_inodes=120631,mode=755,inode64)

proc on /proc type proc (rw,nosuid,nodev,noexec,relatime)

sysfs on /sys type sysfs (rw,nosuid,nodev,noexec,relatime)

securityfs on /sys/kernel/security type securityfs (rw,nosuid,nodev,noexec,relatime)

tmpfs on /dev/shm type tmpfs (rw,nosuid,nodev,inode64)

devpts on /dev/pts type devpts (rw,nosuid,noexec,relatime,gid=5,mode=620,ptmxmode=000)

tmpfs on /run type tmpfs (rw,nosuid,nodev,size=196084k,nr_inodes=819200,mode=755,inode64)

tmpfs on /run/lock type tmpfs (rw,nosuid,nodev,noexec,relatime,size=5120k,inode64)

cgroup2 on /sys/fs/cgroup type cgroup2 (rw,nosuid,nodev,noexec,relatime,nsdelegate,memory_recursiveprot)

pstore on /sys/fs/pstore type pstore (rw,nosuid,nodev,noexec,relatime)

bpf on /sys/fs/bpf type bpf (rw,nosuid,nodev,noexec,relatime,mode=700)

systemd-1 on /proc/sys/fs/binfmt_misc type autofs (rw,relatime,fd=29,pgrp=1,timeout=0,minproto=5,maxproto=5,direct,pipe_ino=1725)

hugetlbfs on /dev/hugepages type hugetlbfs (rw,relatime,pagesize=2M)

mqueue on /dev/mqueue type mqueue (rw,nosuid,nodev,noexec,relatime)

debugfs on /sys/kernel/debug type debugfs (rw,nosuid,nodev,noexec,relatime)

tracefs on /sys/kernel/tracing type tracefs (rw,nosuid,nodev,noexec,relatime)

fusectl on /sys/fs/fuse/connections type fusectl (rw,nosuid,nodev,noexec,relatime)

configfs on /sys/kernel/config type configfs (rw,nosuid,nodev,noexec,relatime)

ramfs on /run/credentials/systemd-sysusers.service type ramfs (ro,nosuid,nodev,noexec,relatime,mode=700)

/var/lib/snapd/snaps/lxd_29351.snap on /snap/lxd/29351 type squashfs (ro,nodev,relatime,errors=continue,threads=single,x-gdu.hide)

/dev/xvda15 on /boot/efi type vfat (rw,relatime,fmask=0077,dmask=0077,codepage=437,iocharset=iso8859-1,shortname=mixed,errors=remount-ro)

binfmt_misc on /proc/sys/fs/binfmt_misc type binfmt_misc (rw,nosuid,nodev,noexec,relatime)

tmpfs on /run/snapd/ns type tmpfs (rw,nosuid,nodev,size=196084k,nr_inodes=819200,mode=755,inode64)

nsfs on /run/snapd/ns/lxd.mnt type nsfs (rw)

tmpfs on /run/user/1001 type tmpfs (rw,nosuid,nodev,relatime,size=98040k,nr_inodes=24510,mode=700,uid=1001,gid=1001,inode64)

--------------------------------------------------

Response:

The provided Linux filesystem configuration does not fully align with CIS benchmarks. The following partitions are missing the required mount options:

* /tmp should have nosuid and noexec mount options.

* /var/tmp should have nosuid and noexec mount options.

* /dev/shm should have noexec mount option.

Additionally, separate partitions for /tmp, /var/tmp, and /dev/shm are recommended.

Overall, while the results indicated that the responses are mainly accurate, the data used to build the Vector DB is purely based on the control sections of the document only.

This can be likely improved by scraping larger sections of the document related to the same control, rather than individual control sections, and by increasing the chunk size and overlap settings. For the sake of researching the feasibility of building some automation based on LLMs and RAGs, we concluded that this can be achieved. The quality of the data is quite important when building the RAG component. Breaking down the data to a more structured format containing only the relevant sections improved the results quite significantly.

Vast AI

While testing on a local Ollama instance on consumer hardware, having a LLM model relatively small, such as the dolphin3 8B parameters used in this small research, is great for testing and experimenting. The more data is added to the Vector DB, without dedicated hardware (GPU), the slower the LLM can process the chunks and respond to the user prompt.

Vast AI is a good platform for experimenting with this setup, though we recommend investing in your own GPU if sensitive data is involved.

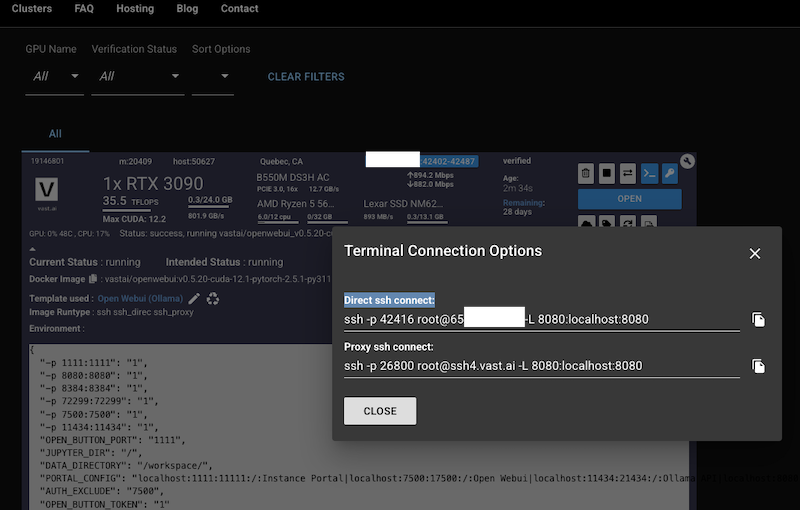

To test using this 8B parameters model, we chose an instance with a single RTX 3090 model GPU, and 13 GB of storage. The total cost at the time of writing of this post was around 0.18$ (US dollars). If you choose to run a larger model, such as 70B parameters models, instances with two RTX 4090 are recommended, which cost around 0.5$. Several pre-defined templates exist. For this test, we chose the Open Webui (Ollama) template.

The screenshot below shows the Vast AI interface when spinning up the instance.

With the instance up and running, we accessed it with SSH and mapped the Ollama port to our local host (ssh -N -L 11434:127.0.0.1:21434 -p 42416 root@instance_IP).

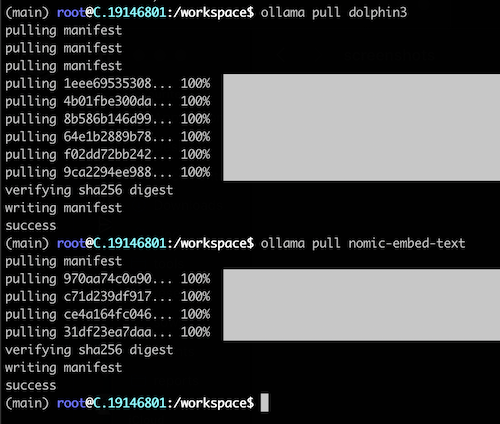

Once connected, we downloaded the required models on the instance, as shown below.

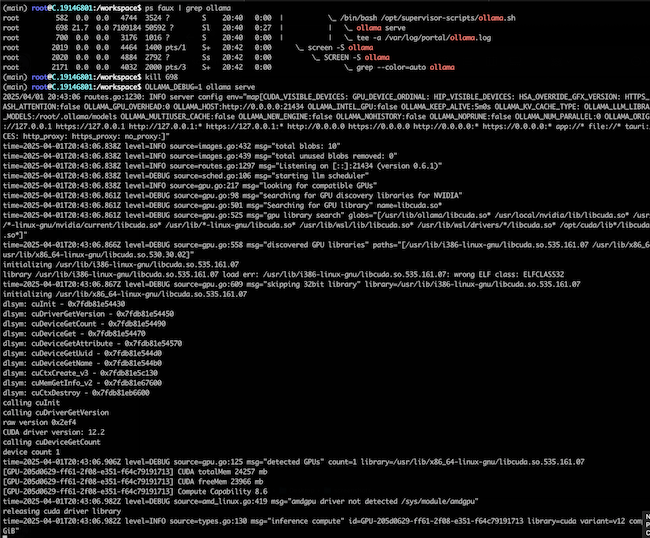

Also, we decided to kill the existing running Ollama process, and restart it with debugging enabled.

The results, as displayed by the video below, indicated that using a GPU made a significant difference in response time.

Conclusion

While this quick research showed LLMs can help automating certain tasks, the quality of the data seems to be the key. To further improve this NZISM example, the next step we would take is scraping and preparing the data in different ways. For example, create a markdown for each NZISM sections to include both the rationale and the controls.

This research allowed us to determine what type of automation we can start considering building using these new technologies. If you work in offensive security, we would love to hear from you around similar research and experiments you might have conducted.

Claudio Contin - Principal Consultant

Claudio Contin - Principal Consultant